The cost of generating electricity includes the capital cost, the financing charges, and the production or operating costs (including fuel and maintenance of the technology) at the point of connection to an electrical load or the electricity grid. When deciding what new plant to build, a utility company compares all of these costs across the slate of available generating units. Once the capital and financing costs are paid, usually after 20 to 30 years, the cost of operation is just the fuel and maintenance costs. As a result, the generating costs for a plant still paying sizable capital costs are much higher than those for a plant whose capital costs have been fully paid.
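To make the effect of paying off capital concrete, here is a minimal Python sketch that converts annual costs into cents per kilowatt hour. The plant's annual capital charge, production cost, and output are hypothetical round numbers chosen for illustration, not data from any real plant.

```python
# Hypothetical figures for illustration only; none come from the article.
ANNUAL_CAPITAL_CHARGE = 150_000_000    # $/year of capital + financing repayment (assumed)
ANNUAL_PRODUCTION_COST = 60_000_000    # $/year of fuel + operation and maintenance (assumed)
ANNUAL_GENERATION_KWH = 7_000_000_000  # kWh generated per year (assumed)


def cents_per_kwh(annual_cost_dollars: float, annual_kwh: float) -> float:
    """Convert an annual cost in dollars to cents per kilowatt hour."""
    return 100 * annual_cost_dollars / annual_kwh


def generating_cost(capital_paid_off: bool) -> float:
    """Generating cost in cents/kWh, with or without the capital charge."""
    capital = 0 if capital_paid_off else ANNUAL_CAPITAL_CHARGE
    return cents_per_kwh(capital + ANNUAL_PRODUCTION_COST, ANNUAL_GENERATION_KWH)


if __name__ == "__main__":
    print(f"Still paying capital: {generating_cost(False):.2f} cents/kWh")
    print(f"Capital paid off:     {generating_cost(True):.2f} cents/kWh")
```

With these assumed inputs the plant costs 3.00 cents per kilowatt hour while it is still paying off its capital, and about 0.86 cents once only production costs remain.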

Regional labor costs, distance from transmission lines, terrain, distance from fuel supplies, and other factors also affect the cost of building a plant. Therefore, the cost of electricity can vary greatly from plant to plant, even among plants using the same fuel type. Because capital costs are the largest component of electric generation costs for most plants, and because older plants have already paid these costs, older plants produce some of the cheapest electricity in the country: their cost of generating electricity is just their production cost, unless environmental regulations require additional technology to be added during their lifetime.

Production Costs

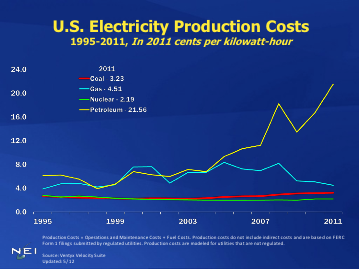

Production costs are much lower than full levelized costs. The Nuclear Energy Institute’s website provides the production costs for nuclear, coal, natural gas, and petroleum generating units. (Renewable energy sources are not included.) The costs are based on data that regulated electric utility companies submit to the Federal Energy Regulatory Commission (FERC) on FERC Form 1, and they are shown in the accompanying graph. On average, in 2011, nuclear power had the lowest electricity production costs at 2.10 cents per kilowatt hour, and petroleum had the highest at 21.56 cents per kilowatt hour. However, because petroleum units are rarely run at that cost (petroleum produced only 0.7 percent of U.S. electricity in 2011), a more useful comparison is between nuclear production costs and those of coal, which averaged 3.23 cents per kilowatt hour in 2011, and natural gas, which averaged 4.51 cents per kilowatt hour.
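The 2011 averages quoted above can be compared directly. This short sketch uses only those four figures and expresses each as a multiple of nuclear's production cost; the helper name `relative_to` is ours and purely illustrative.

```python
# 2011 average production costs (cents per kilowatt hour) as quoted in the text,
# from the Nuclear Energy Institute, based on FERC Form 1 data.
PRODUCTION_COST_2011 = {
    "nuclear": 2.10,
    "coal": 3.23,
    "natural gas": 4.51,
    "petroleum": 21.56,
}


def relative_to(baseline: str, costs: dict[str, float]) -> dict[str, float]:
    """Express each source's cost as a multiple of the baseline source's cost."""
    base = costs[baseline]
    return {source: cost / base for source, cost in costs.items()}


if __name__ == "__main__":
    for source, ratio in relative_to("nuclear", PRODUCTION_COST_2011).items():
        print(f"{source:>12}: {ratio:.2f}x nuclear")
```

On these figures, coal's average production cost was about 1.54 times nuclear's, natural gas's about 2.15 times, and petroleum's about 10.27 times.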

Source: www.nei.org

Levelized Costs

Because production costs do not include capital costs or financing charges, they are much lower than levelized costs. As noted above, levelized costs represent the total costs of building and operating new power plants, including their capital and financing charges. According to the Energy Information Administration (EIA), a new nuclear power plant has one of the highest levelized costs, particularly compared to coal and natural gas-fired plants, and its costs are exceeded only by those of certain renewable plants, such as offshore wind and solar power.

Levelized costs represent the present value of the total cost of building and operating a generating plant over its financial life, converted to equal annual payments and amortized over expected annual generation based on an assumed duty cycle. The levelized cost calculation, which is a projection of the cost of various electricity sources, compares plants beginning operation in the same year, recognizing that construction times differ by technology. For example, nuclear plants take longer to build than natural gas combined cycle plants or wind installations. The levelized cost includes a capital component, fixed and variable operation and maintenance components (the variable component includes fuel), and a transmission component.
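The levelization described above can be sketched with a capital recovery factor, which turns an up-front cost into equal annual payments. This is a simplified illustration, not EIA's National Energy Modeling System method: it ignores taxes, depreciation, construction-period financing, and inflation, and the `PlantAssumptions` fields and example values are hypothetical.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of an up-front cost repaid each year, as equal annual
    payments, to recover it with interest over `years` years."""
    if rate == 0:
        return 1 / years
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


@dataclass
class PlantAssumptions:
    # All values are hypothetical inputs supplied by the user of this sketch.
    overnight_cost_per_kw: float      # capital cost, $ per kW of capacity
    fixed_om_per_kw_year: float       # fixed O&M, $ per kW per year
    variable_om_fuel_per_mwh: float   # variable O&M including fuel, $ per MWh
    transmission_per_mwh: float       # transmission component, $ per MWh
    capacity_factor: float            # assumed duty cycle, between 0 and 1
    cost_of_capital: float            # annual rate, e.g. 0.07 for 7%
    financial_life_years: int


def levelized_cost_per_mwh(p: PlantAssumptions) -> float:
    """Simplified levelized cost in $/MWh: annualized capital plus fixed O&M,
    spread over expected annual output, plus the per-MWh components."""
    crf = capital_recovery_factor(p.cost_of_capital, p.financial_life_years)
    annual_fixed_per_kw = crf * p.overnight_cost_per_kw + p.fixed_om_per_kw_year
    mwh_per_kw_year = HOURS_PER_YEAR * p.capacity_factor / 1000
    return (annual_fixed_per_kw / mwh_per_kw_year
            + p.variable_om_fuel_per_mwh
            + p.transmission_per_mwh)


if __name__ == "__main__":
    example = PlantAssumptions(
        overnight_cost_per_kw=5000, fixed_om_per_kw_year=90,
        variable_om_fuel_per_mwh=12, transmission_per_mwh=1,
        capacity_factor=0.9, cost_of_capital=0.07, financial_life_years=30,
    )
    print(f"{levelized_cost_per_mwh(example):.2f} $/MWh")
```

The capacity factor stands in for the "assumed duty cycle": the same capital cost spread over fewer megawatt hours yields a higher levelized cost, which is one reason technologies with different load profiles are hard to compare.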

EIA provides an estimate of the levelized costs for each technology it represents in the National Energy Modeling System, the modeling system used to produce the forecasts for the Annual Energy Outlook. These estimates are shown in the graph below. The agency reports the costs for dispatchable technologies (such as coal and natural gas) and for non-dispatchable technologies (such as wind and solar) separately because they are not directly comparable. In the graph below, the last five technologies shown are non-dispatchable technologies.

System operators must take the generation from non-dispatchable technologies whenever it is available, which is only intermittently: for example, when the sun shines or the wind blows. Dispatchable technologies are under the control of the system operator, who brings them onto the grid in order of increasing marginal cost as the demand for electricity rises. If the wind stops blowing or the sun stops shining, a dispatchable technology must be available to supply the demand. Thus, non-dispatchable technologies supply energy but not capacity, since they cannot be counted on to meet demand at all times. Some analysts believe that non-dispatchable technologies should pay a capacity charge to cover the cost of building and operating the back-up technology needed when the intermittent technology is unavailable.
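The dispatch order described above can be illustrated with a deliberately simplified single-hour sketch: non-dispatchable output is taken first, then dispatchable units fill the remaining demand from lowest to highest marginal cost. Real system operators use far more elaborate unit commitment and economic dispatch tools; the unit names, capacities, and costs here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    available_mw: float     # output the unit can deliver this hour
    marginal_cost: float    # $/MWh
    dispatchable: bool


def dispatch_hour(units: list[Unit], demand_mw: float) -> dict[str, float]:
    """Assign output to units for a single hour.

    Non-dispatchable output is taken first; any of it beyond demand is
    curtailed. Dispatchable units then fill the remaining demand in order
    of increasing marginal cost.
    """
    must_take = [u for u in units if not u.dispatchable]
    merit_order = sorted(
        (u for u in units if u.dispatchable), key=lambda u: u.marginal_cost
    )
    schedule: dict[str, float] = {}
    remaining = demand_mw
    for unit in must_take + merit_order:
        output = min(unit.available_mw, max(remaining, 0.0))
        schedule[unit.name] = output
        remaining -= output
    if remaining > 1e-9:
        raise ValueError(f"{remaining:.1f} MW of demand cannot be met")
    return schedule


if __name__ == "__main__":
    fleet = [
        Unit("wind", 350, 0.0, dispatchable=False),       # hypothetical
        Unit("gas_peaker", 2000, 40.0, dispatchable=True),
        Unit("nuclear", 1000, 10.0, dispatchable=True),
        Unit("coal", 1000, 25.0, dispatchable=True),
    ]
    print(dispatch_hour(fleet, demand_mw=2000))
```

With 2,000 MW of demand, wind is taken first at 350 MW, nuclear and coal cover the rest, and the gas peaker is not needed. If the wind unit's available output drops to zero, the same function simply calls further down the merit order, which is the back-up role the paragraph assigns to dispatchable plants.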

An example of this situation occurred recently in California, when the state hit its highest demand at 5 pm while wind was producing a mere 350 megawatts out of an installed wind capacity of 4.3 gigawatts (8.1% of installed capacity). Other technologies had to be called on to supply power at California’s peak load. Wind generation is generally greatest during the night, when demand for electricity is lowest, so it does little to provide power when that power is most needed. This situation in California has been discussed in more detail elsewhere.
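The 8.1% figure follows directly from the two numbers in the California example. This small check uses only those numbers; the helper name `share_of_installed` is ours.

```python
def share_of_installed(output_mw: float, installed_mw: float) -> float:
    """Output as a percentage of installed (nameplate) capacity."""
    return 100 * output_mw / installed_mw


WIND_OUTPUT_MW = 350        # wind output at the 5 pm peak, from the text
WIND_INSTALLED_MW = 4_300   # 4.3 gigawatts installed, from the text

share = share_of_installed(WIND_OUTPUT_MW, WIND_INSTALLED_MW)
idle_mw = WIND_INSTALLED_MW - WIND_OUTPUT_MW
print(f"Wind supplied {share:.1f}% of its installed capacity; "
      f"{idle_mw:,} MW of nameplate capacity was not producing.")
```

This prints that wind supplied 8.1% of its installed capacity and that 3,950 MW of nameplate wind capacity was not producing at the peak.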

Because state mandates require a certain percentage of generation to come from renewable sources, intermittent technologies such as wind and solar are “must run” technologies. Once built, these technologies generally have lower operating costs than fossil fuel and nuclear plants, which must also pay for fuel. The way government subsidies are structured also helps determine whether a technology is a “must run” technology. For example, wind receives a production tax credit (PTC) of 2.2 cents per kilowatt hour, but only when it produces electricity. A wind unit receiving the PTC therefore loses that credit whenever a dispatchable technology is operated in its place, and its construction cost is not subsidized to the same extent.
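Because the PTC accrues only on output, the credit a wind unit gives up when it is displaced is simple arithmetic. This sketch uses the 2.2 cents per kilowatt hour rate from the text; the megawatt-hour quantities in the example are hypothetical.

```python
PTC_DOLLARS_PER_MWH = 22.0  # 2.2 cents/kWh (rate quoted in the text) = $22/MWh


def ptc_earned(generated_mwh: float) -> float:
    """Production tax credit earned, in dollars; it accrues only on output."""
    return generated_mwh * PTC_DOLLARS_PER_MWH


def ptc_forgone(available_mwh: float, curtailed_mwh: float) -> float:
    """Credit lost when part of a wind unit's available output is not taken
    because a dispatchable unit runs instead."""
    curtailed = min(max(curtailed_mwh, 0.0), available_mwh)
    return ptc_earned(available_mwh) - ptc_earned(available_mwh - curtailed)


if __name__ == "__main__":
    # Hypothetical: 1,000 MWh of wind available, 400 MWh displaced.
    print(f"${ptc_forgone(1000, 400):,.0f} of credit forgone")
```

In the hypothetical example, displacing 400 MWh of available wind output forgoes $8,800 of credit.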

The levelized costs that EIA provides for plants entering service in 2017 do not include subsidies or tax credits. Also, for coal plants without carbon capture and sequestration (CCS) technology, EIA increases the cost of capital by 3 percentage points. This increase is roughly equivalent to a $15 per ton carbon dioxide emissions fee, and it makes their future cost estimates higher than current project costs. The adjustment represents an implicit hurdle added to greenhouse gas-intensive projects to account for the possibility that, in the future, they may need to purchase allowances or invest in other greenhouse gas emission-reducing projects that offset their emissions.
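To see how a 3-percentage-point adder on the cost of capital raises a plant's annual capital charge, this sketch compares capital recovery factors at two rates. The 7% base rate and 30-year financial life are hypothetical assumptions, not EIA inputs, and the sketch does not reproduce EIA's financing model.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Equal annual payment per dollar of up-front cost at `rate` over `years`."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


HYPOTHETICAL_BASE_RATE = 0.07   # assumed cost of capital, not an EIA figure
GHG_ADDER = 0.03                # the 3-percentage-point adder described in the text
LIFE_YEARS = 30                 # assumed financial life

base = capital_recovery_factor(HYPOTHETICAL_BASE_RATE, LIFE_YEARS)
with_adder = capital_recovery_factor(HYPOTHETICAL_BASE_RATE + GHG_ADDER, LIFE_YEARS)
print(f"Annual capital charge rises by {100 * (with_adder / base - 1):.0f}%")
```

The adder raises the rate additively (7% becomes 10%), not by 3 percent of the rate. Under these assumptions the annual capital charge rises by roughly 32 percent. The equivalence to a $15 per ton carbon dioxide fee stated above is EIA's estimate and is not derived by this sketch.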

Conclusion

Generating technologies differ in the cost to construct, maintain, and operate them, as well as in the cost to connect them to the grid. To compare these costs for future plants, they are levelized into equal annual payments and compared for plants beginning operation in the same year. However, this is not an “apples-to-apples” comparison, because the load profiles of dispatchable and non-dispatchable technologies differ. Plants that have already paid off their capital and interest charges clearly produce the cheapest electricity. If plants are forced to close because of government regulations, the replacement plants will produce much more expensive electricity.

Levelized costs should be compared with caution for the reasons noted above, which have been discussed in more detail elsewhere.