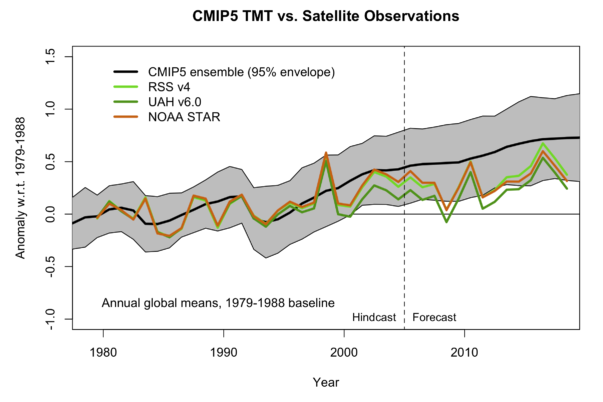

In my first article of this 3-part series on the reliability of the climate models used to guide policy debates, I developed a coin-flipping analogy to make sure the reader understood the concept of a “95% confidence spread.” Then I showed that, even using the charts presented by the climate scientists trying to defend the models, it seemed pretty obvious that over the true forecast period, from 2005 onward, the suite of climate models being evaluated had predicted too much warming.

The mismatch between the predicted global temperatures and the satellite observations was particularly severe. As I explained in my article: “[N]ot a single data point in any of the three satellite series ever hit the mean projection of global temperature. Furthermore, as of the most recent observation, two out of three of the datasets lie below the 95% spread—and one of these series (UAH v6.0) spent much of the entire forecast period below the spread.” (See figure below.)
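For readers who want to see what “lying below the 95% spread” means operationally, here is a minimal Python sketch. It assumes, as is common in model-versus-observation charts, that the spread is the 2.5th to 97.5th percentile range across the ensemble’s runs for a given year. The run values and the observation are made-up placeholders, not the actual model or satellite data, and the function names are hypothetical.

```python
import statistics

def spread_95(runs):
    """Return the (2.5th, 97.5th) percentile bounds across ensemble runs.

    statistics.quantiles with n=40 returns 39 cut points spaced 2.5% apart,
    so the first is the 2.5th percentile and the last is the 97.5th.
    """
    cuts = statistics.quantiles(runs, n=40, method="inclusive")
    return cuts[0], cuts[-1]

def classify(observation, runs):
    """Say whether an observation falls below, inside, or above the spread."""
    low, high = spread_95(runs)
    if observation < low:
        return "below"
    if observation > high:
        return "above"
    return "inside"

# Hypothetical anomalies (deg C) for one year: 20 model runs and one observation.
runs = [0.40 + 0.02 * i for i in range(20)]  # evenly spaced from 0.40 to 0.78
mean_projection = statistics.mean(runs)
obs = 0.35

low, high = spread_95(runs)
print(f"mean projection: {mean_projection:.2f}")
print(f"95% spread: {low:.2f} to {high:.2f}")
print(f"observation is {classify(obs, runs)} the spread")
```

Note that an observation can sit well away from the mean projection and still be “inside” the spread; falling below the spread is the stronger claim.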

In the present article (Part 2 of 3 in the series), I will draw on Judith Curry’s excellent primer on the current state of climate models to show how they actually work, and to explain why there is still so much controversy over long-term predictions. (I should note that Ronald Bailey at reason.com had an extensive summary of the climate models for the layperson back in November. It is well worth reading, though one gets the sense from Bailey’s discussion that the climate models have performed better than the charts in my previous post indicate.)

Yet before I dive into Curry’s primer on climate modeling, let me first make an appeal to academics who are outside the formal field of climate science.

An Appeal to Outside Academics

At this stage in the battles over climate change, and specifically over government policies that are ostensibly designed to mitigate its harmful effects, it is important for outside academics, especially those in the hard sciences such as physics, to review the state of climate science and tell the public what they think.

There are huge problems with the way the climate change debate has been framed, having to do with (what Hayek called) “the pretense of knowledge” or, in more colloquial language, with the public being bamboozled by smart guys (and gals) in white lab coats.

Suppose the question is, “What is our best guess as to how warm the planet will be in the year 2100, if we assume XYZ about the pattern of future greenhouse gas emissions?” In that case, it is perfectly reasonable for political officials and the average citizen to answer, “I really don’t know; let’s ask the people with PhDs in technical fields, from reputable universities, who have the most peer-reviewed publications in the field of climate change modeling.” Even here, we would want to be aware of controversies among qualified peers in the field, but in any event going to “the leading climate scientists” is a reasonable answer to the question posed at the beginning of this paragraph.

However, suppose instead the question is, “How much confidence should we place in the answer that these scientists give us?” Now this is an entirely different question. Here, we would no doubt ask the climate scientists themselves to self-assess the accuracy of their projections, but now it is entirely appropriate to ask other experts—especially scientists from other fields—to weigh in on the matter.

It is an obvious character flaw in many intellectuals (myself included) that we are quite proud of our academic prowess, and thus tend to overestimate our abilities. So the issue isn’t, “Are the climate scientists smart?” The issue is: “Are they as smart as they think they are?”

If a university is trying to determine whether to increase funding of (say) gender studies, non-Euclidean geometry, ballet, or computer programming, it would obviously be incredibly naïve to merely ask the faculty from those areas. The university officials would no doubt ask representatives from the candidate programs to speak up on their behalf, but it would also be necessary to rely on input from people outside the fields to assess their relative importance.

Likewise, someone who was attracted to climate science as a young student, then spent years training in a relevant field and has now made a career publishing with climate models, isn’t the most objective person to ask, “How much significance should we attribute to the results of these models?”

The problem is only magnified when intellectuals take the results of their formal models and then just assume that government intervention is the correct response. Ironically, many of the most vocal climate activists are also leftist progressives, who recognize the harms of the Drug War. And yet, when they diagnose that humanity is “addicted to fossil fuels,” they recommend much harsher government crackdowns than they do in the case of humans who are addicted to heroin.

Look, I’m an economist. I know all about smart people smuggling in ideological assumptions while hiding behind formal, “scientific” models that don’t actually prove what they claim. You could say it’s in my job description to recognize this type of behavior.

And so I reiterate my plea to outside academics, especially those in the hard sciences: check the state of the climate models for yourselves, and assess independently whether it is wise to use these computer simulations to justify trillions of dollars in new taxes and/or major interventions in the energy and transportation sectors.

Again, the issue isn’t, “Can you make a better model?” Rather, the issue is, “Just how good are the models so far?” That latter question is not one we can let the model creators answer for us.

Judith Curry’s Primer

In 2017, climate scientist Judith Curry published a 30-page primer for the Global Warming Policy Foundation entitled, “Climate models for the layman.” For those unfamiliar with her, Dr. Curry was formerly the chair of the School of Earth and Atmospheric Sciences at the Georgia Institute of Technology.

At one point Curry was in the “mainstream” of climate science, but over time she began questioning the extreme claims made by some of her peers. She is now sympathetic to the “lukewarmers” (though she still thinks we are plagued by uncertainty), and the tribal fighting she saw among some of her former colleagues has reinforced her gradually growing suspicion that maybe the much-reviled “skeptics” actually had a point.

In the remainder of this post I’ll summarize some of Curry’s most significant points from her primer, though the whole thing is worth reading for those wishing to understand climate science better.

Curry’s Takeaway

Here is an excerpt from the Executive Summary of Curry’s primer:

There is considerable debate over the fidelity and utility of global climate models (GCMs). This debate occurs within the community of climate scientists, who disagree about the amount of weight to give to climate models relative to observational analyses…

• GCMs have not been subject to the rigorous verification and validation that is the norm for engineering and regulatory science.

• There are valid concerns about a fundamental lack of predictability in the complex nonlinear climate system.

• There are numerous arguments supporting the conclusion that climate models are not fit for the purpose of identifying with high confidence the proportion of the 20th century warming that was human-caused as opposed to natural.

• There is growing evidence that climate models predict too much warming from increased atmospheric carbon dioxide.

• The climate model simulation results for the 21st century reported by the Intergovernmental Panel on Climate Change (IPCC) do not include key elements of climate variability, and hence are not useful as projections for how the 21st century climate will actually evolve.

Climate models are useful tools for conducting scientific research to understand the climate system. However…GCMs are not fit for the purpose of attributing the causes of 20th century warming or for predicting global or regional climate change on timescales of decades to centuries, with any high level of confidence. By extension, GCMs are not fit for the purpose of justifying political policies to fundamentally alter world social, economic and energy systems. [Curry 2017, bold added.]

I urge academics who are not climate scientists to at least peruse the arguments Curry offers in support of the claims quoted above. (And here is a NASA climate scientist at RealClimate.org responding, in case you want to hear the “orthodox” pushback against Curry’s four-part series on why she thinks the IPCC’s confidence in its “attribution” estimate is overstated.)

The Climate System Is Too Complex for Current Computers

Climate models don’t violate the laws of physics, but—as physicist Sabine Hossenfelder explained in her NYT article last summer—current computers would take too long to solve the equations for a detailed simulation of the actual planet’s climate (including ocean and atmosphere). So in practice, the computer models break Earth’s climate system into a three-dimensional grid. The following diagram from Judith Curry’s primer illustrates this:

The simulations then take a shortcut, approximating what occurs within each of these grid cells. As Curry explains:

The number of cells in the grid system determines the model ‘resolution’ (or granularity), whereby each grid cell effectively has a uniform temperature, and so on. Common resolutions for GCMs are about 100–200 km in the horizontal direction, 1 km vertically, and a time-stepping resolution typically of 30 min. While at higher resolutions, GCMs represent processes somewhat more realistically, the computing time required to do the calculations increases substantially – a doubling of resolution requires about 10 times more computing power, which is currently infeasible at many climate modelling centers. The coarseness of the model resolution is driven by the available computer resources, with tradeoffs made between model resolution, model complexity, and the length and number of simulations to be conducted. Because of the relatively coarse spatial and temporal resolutions of the models, there are many important processes that occur on scales that are smaller than the model resolution (such as clouds and rainfall…). These subgrid-scale processes are represented using ‘parameterisations’, which are simple formulas that attempt to approximate the actual processes, based on observations or derivations from more detailed process models. These parameterisations are ‘calibrated’ or ‘tuned’ to improve the comparison of the climate model outputs against historical observations.

The actual equations used in the GCM computer codes are only approximations of the physical processes that occur in the climate system. While some of these approximations are highly accurate, others are unavoidably crude. This is because the real processes they represent are either poorly understood or too complex to include in the model given the constraints of the computer system. Of the processes that are most important for climate change, parameterisations related to clouds and precipitation remain the most challenging, and are responsible for the biggest differences between the outputs of different GCMs. [Curry 2017, bold added.]
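The resolution tradeoff in Curry’s excerpt can be made concrete with back-of-the-envelope arithmetic. The Python sketch below counts the grid columns needed to cover Earth’s surface (roughly 510 million km²) at several horizontal spacings, and estimates the cost of halving the grid spacing. The cost rule is a simplifying assumption of ours, not Curry’s: cost is taken to scale with the number of cells times the number of time steps, and the time step is assumed to shrink in proportion to the grid spacing (a common numerical-stability constraint). On that naive count, refining only the horizontal grid costs a factor of 8, in the same ballpark as Curry’s “about 10 times”; real models differ in the details.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of Earth, km^2

def grid_columns(spacing_km):
    """Approximate number of horizontal grid columns at a given spacing."""
    return EARTH_SURFACE_KM2 / spacing_km ** 2

def refinement_cost(factor=2, refine_vertical=False):
    """Naive cost multiplier for making the grid `factor` times finer.

    Assumes cost ~ (number of cells) x (number of time steps), with the
    time step shrinking in proportion to grid spacing. Both are simplifications.
    """
    horizontal = factor ** 2                 # finer in both horizontal directions
    vertical = factor if refine_vertical else 1
    time_steps = factor                      # shorter time step, more steps per run
    return horizontal * vertical * time_steps

for spacing in (200, 100, 50):
    print(f"{spacing} km spacing: ~{grid_columns(spacing):,.0f} columns")

print("double horizontal resolution:", refinement_cost(2), "x cost")
print("double horizontal and vertical:", refinement_cost(2, True), "x cost")
```

Even at 100 km spacing, each column is still far wider than an individual cloud, which is why the subgrid processes Curry describes must be parameterised.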

Now the reader can begin to see why different modeling teams can come up with different projections of future global warming, even when they use the same underlying assumptions about greenhouse gas emissions and so forth, and even though they all agree on the basic physical laws driving the climate system.

To get a sense of how deep the disagreements run, note that mainstream climate scientists don’t even agree on whether more clouds will, on net, make the planet warmer or colder. (!) This is obviously a critical issue, and it is a very complex one. In his reason.com summary piece, Ronald Bailey devotes an entire section to “The Cloud Wildcard.” Just to give a flavor of the complexity, here’s his opening:

About 30 percent of incoming sunlight is reflected back into space with bright clouds being responsible for somewhere around two-thirds of that albedo effect. In other words, clouds generally tend to cool the earth. However, high thin cirrus clouds don’t reflect much sunlight but they do slow the emission of heat back into space, thus they tend to warm the planet.
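Bailey’s round numbers imply a rough magnitude for the cloud term. The Python sketch below assumes a global-average incoming sunlight of about 340 W/m² at the top of the atmosphere (the solar constant of roughly 1361 W/m² divided by 4, which spreads the intercepted sunlight over the whole sphere) and works out how much of it clouds reflect if they account for about two-thirds of a 30 percent albedo. Because the inputs are approximate, treat the output as an order-of-magnitude guide only.

```python
SOLAR_CONSTANT = 1361.0    # W/m^2 at Earth's distance from the Sun (approx.)
PLANETARY_ALBEDO = 0.30    # ~30% of incoming sunlight reflected (per Bailey)
CLOUD_SHARE = 2.0 / 3.0    # clouds' rough share of that reflection (per Bailey)

# Average over the whole sphere: intercepted disk area / surface area = 1/4.
incoming = SOLAR_CONSTANT / 4
reflected_total = incoming * PLANETARY_ALBEDO
reflected_by_clouds = reflected_total * CLOUD_SHARE

print(f"incoming (global mean): {incoming:.0f} W/m^2")
print(f"reflected in total:     {reflected_total:.0f} W/m^2")
print(f"reflected by clouds:    {reflected_by_clouds:.0f} W/m^2")
print(f"clouds' share of all sunlight: {PLANETARY_ALBEDO * CLOUD_SHARE:.0%}")
```

For comparison, the radiative forcing usually cited for a doubling of CO2 is about 3.7 W/m², so a shift of only about 5 percent in this reflected-sunlight term would be of similar size. The sketch counts reflected sunlight only; it ignores the heat-trapping effect of high cirrus clouds that Bailey also mentions.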

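Indeed, MIT climate scientist Richard Lindzen, one of the most credentialed of the “skeptics,” is known for his “infrared iris” hypothesis, which holds that high cirrus cloud cover in the tropics shrinks as the surface warms, letting more heat escape to space and so damping the warming.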

Matching the Historical Observations

How well do the climate models favored by the UN’s IPCC match the historical observations? The following chart comes from Curry’s primer, which she in turn reproduced from the IPCC’s Fifth Assessment Report (AR5). I have also included Curry’s commentary on the chart.

Climate model simulations for the same period [from 1850 to the present] are shown in Figure 3. The modelled global surface temperature matches closely the observed temperatures for the period 1970–2000. However, the climate models do not capture the large warming from 1910 to 1940, the cooling from 1940 to the late 1970s and the flat temperatures in the early 21st century.

The key conclusion of the Fifth Assessment Report is that it is extremely likely that more than half of the warming since 1950 has been caused by humans, and climate model simulations indicate that all of this warming has been caused by humans.

If the warming since 1950 was caused by humans, then what caused the warming during the period 1910–1940? The period 1910–1940 comprises about 40% of the warming since 1900, but is associated with only 10% of the carbon dioxide increase since 1900. Clearly, human emissions of greenhouse gases played little role in this early warming. The mid-century period of slight cooling from 1940 to 1975—referred to as the ‘grand hiatus’—has also not been satisfactorily explained. [Curry pp. 10-11, bold added.]
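Curry’s point can be expressed as a simple ratio. Using only her two round numbers (the 1910 to 1940 period accounts for about 40% of the warming since 1900 but only about 10% of the CO2 increase), the Python sketch below compares each period’s share of the warming to its share of the CO2 rise. Lumping all other years since 1900 into a single block is our simplification; it blurs the 1900 to 1910 and 1940 to 1975 subperiods.

```python
def warming_per_co2_share(warming_share, co2_share):
    """Ratio of a period's share of total warming to its share of the CO2 rise.

    A ratio of 1 means the period warmed in proportion to its CO2 increase.
    """
    return warming_share / co2_share

early = warming_per_co2_share(0.40, 0.10)         # 1910-1940, per Curry
rest = warming_per_co2_share(1 - 0.40, 1 - 0.10)  # all other years since 1900

print(f"1910-1940:       {early:.2f}")
print(f"rest since 1900: {rest:.2f}")
print(f"early / rest:    {early / rest:.1f}")
```

On these numbers the early period warmed about six times more, relative to its share of the CO2 increase, than the rest of the record did, which is why Curry concludes that greenhouse gases played little role in that early warming.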

If you study the reproduced Figure 3 above, in light of Curry’s commentary, you can see why a growing number of scientists—many of whom are quite respectable—are contemplating the possibility that the UN and other “official” bodies have been overstating the climate system’s sensitivity to carbon dioxide.

Remember, there’s “wiggle room” afforded by the computational shortcuts that the professional modelers need to take, due to the coarseness of their grids in representing the Earth’s climate. After the fact, they can look at the historical record of carbon dioxide concentrations, temperature readings, and so on, and adjust the knobs on their models to get a decent fit.

For example, if the upper range of estimates of carbon dioxide’s potency is correct, then the global cooling that occurred from the 1940s through the 1970s is a puzzle. (This phenomenon was what prompted Leonard Nimoy to warn the public about a coming ice age in 1978.) But wait: something else changed during the 20th century besides continuously rising atmospheric concentrations of CO2. Namely, the amount of particulate pollution (aerosols) in the air first increased because of a growing economy, then decreased because of changing practices among advanced countries (popularly attributed to air-quality regulations, but see my dissent).

By adjusting the dials on the effect of aerosols in reflecting some of the incoming solar radiation, climate scientists today can make their “hindcasts” of past temperature changes roughly line up with historical observations, while assuming that additional carbon dioxide has a potent effect on driving further warming. But once we leave “hindcasts” and go to a genuine forecast period, the models no longer match the observations very well—as I pointed out in Part 1 of this series.
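The “adjust the dials” point can be shown with a deliberately crude toy. The Python sketch below is not a climate model: it assumes temperature responds linearly to CO2 forcing plus a scaled aerosol forcing, T = lam × (F_co2 + k × F_aer), and every forcing value and parameter in it is a made-up placeholder. Because the toy “past” has aerosol cooling rising in lockstep with CO2 forcing, a high-sensitivity, strong-aerosol setting and a low-sensitivity, weak-aerosol setting reproduce the same hindcast exactly. Once aerosol forcing levels off in the toy forecast period, the two settings diverge.

```python
import math

def toy_temperature(lam, k, f_co2, f_aer):
    """Toy response T = lam * (F_co2 + k * F_aer); lam and k are the 'dials'."""
    return [lam * (c + k * a) for c, a in zip(f_co2, f_aer)]

# Hypothetical hindcast: aerosol cooling grows in lockstep with CO2 forcing.
hind_co2 = [0.5, 1.0, 1.5, 2.0]
hind_aer = [-0.4 * c for c in hind_co2]

# Hypothetical forecast: CO2 forcing keeps rising, aerosol forcing levels off.
fore_co2 = [2.5, 3.0, 3.5]
fore_aer = [hind_aer[-1]] * len(fore_co2)

high = (1.2, 1.0)   # high CO2 sensitivity, strong aerosol cooling
low = (0.8, 0.25)   # low CO2 sensitivity, weak aerosol cooling

hind_high = toy_temperature(*high, hind_co2, hind_aer)
hind_low = toy_temperature(*low, hind_co2, hind_aer)
fore_high = toy_temperature(*high, fore_co2, fore_aer)
fore_low = toy_temperature(*low, fore_co2, fore_aer)

# Both settings fit the toy past equally well...
assert all(math.isclose(a, b) for a, b in zip(hind_high, hind_low))
print("hindcast (high):", [round(t, 2) for t in hind_high])
print("hindcast (low): ", [round(t, 2) for t in hind_low])

# ...but disagree about the toy future.
print("forecast (high):", [round(t, 2) for t in fore_high])
print("forecast (low): ", [round(t, 2) for t in fore_low])
```

In the toy, the historical record alone cannot tell the two settings apart; only data from a period when the two forcings move differently can. That is the sense in which a good hindcast is weaker evidence than a good genuine forecast.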

Conclusion

The people involved in “establishment” climate science are not dumb, and I don’t think they are twirling their mustaches in smoke-filled rooms. However, the creators and biggest fans of the current crop of climate models are not unbiased judges of how much confidence policymakers and the public should be putting in the results that the computers pump out.

There are many scientists, some of whom have won awards in climate science and have even participated in previous versions of the IPCC’s reports, who disagree with the claim that climate change poses an existential threat to humanity unless governments enact major new interventions in the energy and transportation sectors. The loudest voices in the alarmist camp, and especially those labeling dissenters as “science deniers,” are bluffing.

In this post I’ve explained the basics of how the climate computer models work, and why scientists are still uncertain about even seemingly basic topics like clouds. There are several parameters that can be tweaked to make the models reasonably match observed temperatures after the fact, but when it comes to genuine forecasts, it seems that the current models still place too much weight on additional carbon dioxide.

In the next and final article in this 3-part series, I will summarize the perspectives of more climate scientists, both “for” and “against” the current IPCC orthodoxy. In closing, let me make one final plea to outside intellectuals: investigate why establishment figures are claiming “consensus” for what is a fairly radical set of government policy proposals, and judge for yourselves whether their case is as solid as they claim.